#Memory Retrieval AI Systems


Text

Memory Retrieval AI Systems | Hyperthymesia.ai

Enhance your information retrieval processes with our Memory Retrieval AI Systems in the USA. Our systems use sophisticated AI technologies to improve data access and recall, providing precise and reliable results. Learn more about these innovative systems at Hyperthymesia.ai.

Memory Retrieval AI Systems

0 notes

Text

KIOXIA Unveils 122.88TB LC9 Series NVMe SSD to Power Next-Gen AI Workloads

KIOXIA America, Inc. has announced the upcoming debut of its LC9 Series SSD, a new high-capacity enterprise solid-state drive (SSD) with 122.88 terabytes (TB) of storage, purpose-built for advanced AI applications. Featuring the company’s latest BiCS FLASH™ generation 8 3D QLC (quad-level cell) memory and a fast PCIe® 5.0 interface, this cutting-edge drive is designed to meet the exploding data demands of artificial intelligence and machine learning systems.

As enterprises scale up AI workloads—including training large language models (LLMs), handling massive datasets, and supporting vector database queries—the need for efficient, high-density storage becomes paramount. The LC9 SSD addresses these needs with a compact 2.5-inch form factor and dual-port capability, providing both high capacity and fault tolerance in mission-critical environments.

Form factor refers to the physical size and shape of the drive—in this case, 2.5 inches, which is standard for enterprise server deployments. PCIe (Peripheral Component Interconnect Express) is the fast data connection standard used to link components to a system’s motherboard. NVMe (Non-Volatile Memory Express) is the protocol used by modern SSDs to communicate quickly and efficiently over PCIe interfaces.

Accelerating AI with Storage Innovation

The LC9 Series SSD is designed with AI-specific use cases in mind—particularly generative AI, retrieval augmented generation (RAG), and vector database applications. Its high capacity enables data-intensive training and inference processes to operate without the bottlenecks of traditional storage.

It also complements KIOXIA’s AiSAQ™ technology, which improves RAG performance by storing vector elements on SSDs instead of relying solely on costly and limited DRAM. This shift enables greater scalability and lowers power consumption per TB at both the system and rack levels.
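To make the DRAM-versus-SSD trade-off concrete, here is a minimal sketch of the general idea of keeping vectors in a file and scanning them through a memory map, so only the pages being read need to sit in RAM. It is not KIOXIA's AiSAQ implementation, whose internals the announcement does not describe. The file layout, the `DIM` size, and the function names are all assumptions made for illustration.

```python
import mmap
import os
import struct
import tempfile

DIM = 4                              # hypothetical embedding size
RECORD = struct.Struct(f"<{DIM}f")   # one little-endian float32 vector


def write_vectors(path, vectors):
    """Store each vector as a fixed-size binary record on disk."""
    with open(path, "wb") as f:
        for vec in vectors:
            f.write(RECORD.pack(*vec))


def nearest(path, query):
    """Brute-force scan: index of the stored vector with the largest dot product."""
    best_index, best_score = -1, float("-inf")
    with open(path, "rb") as f:
        # Map the file read-only; the OS pages records in from storage on demand.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            for i in range(len(mm) // RECORD.size):
                vec = RECORD.unpack_from(mm, i * RECORD.size)
                score = sum(a * b for a, b in zip(vec, query))
                if score > best_score:
                    best_index, best_score = i, score
    return best_index


def demo():
    """Write three toy vectors to a temp file and query them."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "vectors.bin")
        write_vectors(path, [(1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0)])
        return nearest(path, (0.1, 0.9, 0.0, 0.0))


if __name__ == "__main__":
    print(demo())
```

A real vector database would use an approximate index rather than a full scan. The sketch only shows that the vectors themselves can stay on storage while a query runs.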

“AI workloads are pushing the boundaries of data storage,” said Neville Ichhaporia, Senior Vice President at KIOXIA America. “The new LC9 NVMe SSD can accelerate model training, inference, and RAG at scale.”

Industry Insight and Lifecycle Considerations

Gregory Wong, principal analyst at Forward Insights, commented:

“Advanced storage solutions such as KIOXIA’s LC9 Series SSD will be critical in supporting the growing computational needs of AI models, enabling greater efficiency and innovation.”

As organizations look to adopt next-generation SSDs like the LC9, many are also taking steps to responsibly manage legacy infrastructure. This includes efforts to sell SSD units from previous deployments—a common practice in enterprise IT to recover value, reduce e-waste, and meet sustainability goals. Secondary markets for enterprise SSDs remain active, especially with the ongoing demand for storage in distributed and hybrid cloud systems.

LC9 Series Key Features

122.88 TB capacity in a compact 2.5-inch form factor

PCIe 5.0 and NVMe 2.0 support for high-speed data access

Dual-port support for redundancy and multi-host connectivity

Built with 2 Tb QLC BiCS FLASH™ memory and CBA (CMOS Bonded to Array) technology

Endurance rating of 0.3 DWPD (Drive Writes Per Day) for enterprise workloads
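As a rough worked example of what the endurance figure means, the sketch below multiplies capacity by DWPD to get a daily write budget. The capacity and DWPD values come from the list above. The five-year period is a hypothetical assumption for illustration only, since the announcement does not state a rating period.

```python
def daily_write_budget_tb(capacity_tb: float, dwpd: float) -> float:
    """Terabytes that can be written per day at the rated endurance."""
    return capacity_tb * dwpd


def lifetime_writes_pb(capacity_tb: float, dwpd: float, years: float) -> float:
    """Total petabytes written over the period (decimal units, 1 PB = 1000 TB)."""
    return daily_write_budget_tb(capacity_tb, dwpd) * 365 * years / 1000


CAPACITY_TB = 122.88   # from the feature list
DWPD = 0.3             # from the feature list
ASSUMED_YEARS = 5      # hypothetical period, NOT stated in the announcement

if __name__ == "__main__":
    print(f"{daily_write_budget_tb(CAPACITY_TB, DWPD):.2f} TB/day")
    print(f"{lifetime_writes_pb(CAPACITY_TB, DWPD, ASSUMED_YEARS):.1f} PB "
          f"over {ASSUMED_YEARS} years (assumed)")
```

At 0.3 DWPD the drive can absorb roughly 36.86 TB of writes per day, which fits read-heavy workloads better than write-heavy ones.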

The KIOXIA LC9 Series SSD will be showcased at an upcoming technology conference, where the company is expected to demonstrate its potential role in powering the next generation of AI-driven innovation.

2 notes


Text

We’re Already Cyborgs—Just in Denial

When we hear the word cyborg, we tend to imagine science fiction clichés: half-human, half-metal warriors with robotic limbs, glowing eyes, and neural implants. The future of human-machine fusion is often portrayed as something dramatic, jarring, and unmistakably visible. But the truth is, the cyborg revolution has already begun—and it didn’t come with a bang. It came quietly, subtly, in the form of devices we now carry every day.

Take a look around. What’s the first thing you touch in the morning? For most of us, it’s a smartphone. What stores your memories now? What remembers your appointments, your passwords, your thoughts, your moments of inspiration, your communications, your health data, your maps, and your preferences? Not your biological brain—but your phone. We’ve already begun to externalize our cognition, emotions, and even identity into the digital realm.

This isn’t just dependency. This is integration. Our smartphones aren’t tools anymore—they’re extensions of ourselves. We don’t think with them; we think through them. We don’t store knowledge as we used to—we store the path to knowledge, trusting algorithms to retrieve the answer when needed. They’re prosthetics for the mind, and the more they evolve, the more we adapt our behaviors, thoughts, and social structures around them.

This is the essence of what it means to be a cyborg: a being that blends biological and technological systems. We may not have neural implants (yet), but we do have digital limbs—always within reach, always on, always shaping our decisions. The line between human and machine is already blurred. It’s just not visually obvious.

The picture of the cyborg has always been distorted by visual bias. Because we don’t look like robots, we assume we’re still purely biological. But the truest forms of transformation often begin invisibly—changing function before form. And that’s exactly what’s happening now. Our reliance on digital tools isn’t just convenience; it’s a step in evolution. We are extending our species into new realms of thought, memory, and communication—at the cost of forgetting that we’re even changing.

Technology now plays roles in our lives that go far beyond utility. It mediates our relationships. It curates our emotions. It decides what we see, what we believe, and even what we desire. Our sense of self is increasingly intertwined with machine feedback loops. Your digital footprint may know you better than your friends or family. Sometimes, it knows you better than you know yourself.

But because this shift is so gradual, we don’t recognize it as radical. That’s the paradox of transformative technologies—they rarely announce themselves with spectacle. Electricity didn’t start with cities glowing overnight. The internet didn’t begin with a global boom. And cyborgization isn’t going to start with a chip in your head. It started with a chip in your hand—and then your pocket.

Of course, like every generational shift, the early phases come with skepticism, fear, and rejection. When the first printing presses emerged, people feared the loss of memory and oral tradition. When phones arrived, they worried conversation would become impersonal. When the internet spread, concerns exploded about attention spans and truth. Each wave of progress was met with resistance—until it became mundane. The same will happen with the next step: wearables, implants, AI-assisted cognition, maybe even neural interfaces. It will seem unnatural—until it doesn’t.

We’re now at a crossroads where this quiet transformation will become louder. Technologies like AI, augmented reality, and brain-computer interfaces are beginning to change the definition of what it means to be human. These shifts won't just alter how we live—they'll alter who we are. But the groundwork has already been laid. We’ve been preparing ourselves for this integration for decades, bit by bit, app by app.

We often frame these changes as ethical or philosophical dilemmas—as if the future is still ahead of us. But in many ways, it’s already behind us. The debate isn't whether we'll become cyborgs. The only real question is whether we’ll acknowledge that we already are.

This awareness matters. Because if we think of cyborgs only as science fiction beings, we miss the opportunity to design this transformation consciously. We let corporations, algorithms, and market forces dictate the terms of our evolution. But if we recognize that we're in the middle of a species-level shift, we can approach it with intention—setting boundaries, ensuring ethics, and keeping the human spirit intact even as we augment it.

What’s needed now is time—and conversation. The more we talk about this fusion of biology and machine as a continuum (not a binary), the more we normalize the truth that human evolution is technological. Our tools shape us as much as we shape them. We need cultural frameworks that treat this integration not as a dystopia or utopia, but as the next phase in our long story of adaptation.

The earlier we understand this, the more agency we’ll have in shaping it. Cyborgs aren’t some distant possibility—they’re here. They answer emails at stoplights, navigate cities with satellites, fall in love through screens, and ask AI to help them write. They don’t need glowing eyes or robotic limbs. All they need is to stop pretending they're fully human anymore.

We’ve already taken the leap. Now it’s time to look down, acknowledge the flight, and decide where we want to land.

3 notes


Text

Restless, Respite

my take on the final scene of the Hold On ending | Head Engineer Mark x The Captain (can be read as platonic) | Words: 1,232 | read on AO3

basically I was thinking far too much about how we are presumably the last to wake up that final time (besides the colonists) and how it seems like the crew has been up for a while and how engineer Mark might be feeling about that (not good)

The Head Engineer tapped his fingertips anxiously on the rim of his coffee mug.

The Captain hadn't woken up yet.

It had been a good half hour of the Invincible II’s various personnel filling the bridge and every other area of the ship. The crew leads conferred with each other and their respective teams to ensure everything was fully functional and ready to move on to the next phase of the mission. The entire crew was bustling, eager to set foot on their new home, the promise of a new life awaiting them.

Still, the Captain did not stir.

Despite assurance from the computer AI that all systems were nominal, Mark had checked every major system personally, and with a little more caution than usual. If anyone noticed the slight lack in his typical blasé confidence, they didn’t mention it.

They'd had time to run diagnostics, prepare equipment and shuttles to take down to the surface, and all have their “morning” coffee. All except The Captain and Mark.

With a quiet sigh, he pressed a few icons on his tablet, issuing a command to the system to reheat their drink for the fifth time. They'd wake up any minute now, for sure.

Celci let him know that all one hundred thousand colonists were doing well, and seemed a little surprised when his response didn't hold quite the same bite as most of their interactions. (She also insisted that there was nothing wrong with the Captain’s pod, and people wake up at different speeds.) Burt assured him that the reactor was stable and had fared well with the journey, followed by Gunther enthusiastically reporting that the advance team was ready to head down to the planet's surface to scout ahead.

For once, there was no emergency. It was almost too good to be true. There was really no need to wake the Captain prematurely. If they needed a few more minutes after all they'd been through, that was fine.

And yet, Mark couldn't help the growing pit of dread in his stomach.

The details were a little fuzzy, but with every passing minute his memories of the wormhole and the ensuing chaos became clearer, more solid — the freshest of which being a pained exchange with his Captain, his dearest friend, in the warp core room, as the weight of the multiverse fell heavy on his shoulders with realisation and the subsequent guilt and remorse, the walls of reality literally beginning to crumble around them.

Remembering it all was like waking from a dream in reverse; images of each universe and lifetime stung in the tight grip of his psyche instead of slipping from recollection. His mind began to race, panicking slightly as he remembered times his Captain hadn't woken up at all.

Mark took a small sip of coffee. He felt a headache coming on.

As if on cue, he felt a gentle bump against his leg. He cast his gaze towards it and was happy to see Chica softly nudging him, panting cheerfully with her big eyes aglow.

‘Hey, bub.’

He smiled and bent to pet her soft fur with his free hand, grateful for a comforting distraction from his wandering thoughts.

‘Yeah, any moment now,’ he muttered under his breath as he went to retrieve the Captain’s coffee mug while Chica trotted away.

‘Initialising Wakey-Wakey Protocol.’

Mark’s head shot up at the sound of the AI voice announcing their emergence from cryo-sleep. Relief flooded him as he saw them slowly step out of their pod, tentatively poking a crew member as if wanting to make sure this was real. He understood the feeling.

‘Mornin’, Captain!’ Mark beamed as he strode over to greet them. He eyed the Captain up and down with concern. They looked about as exhausted and on edge as he’d felt upon waking up this time.

‘Ooh, you look like you’ve been through hell. Yeah, cryo-sleep sucks, but coffee can help.’ He offered them their mug which they took automatically. With a friendly clunk of his own cup against theirs, he made his way around the main console to talk with various crew members while the crew leads took turns speaking to the Captain.

‘Computer, let’s get those blast shields open.’

The head engineer let out an awed sigh at the view. ‘The trip was smooth. Just a few rocks, couple cosmic rays, nothing the computer couldn’t handle on its own,’ he reassured, hoping to ease their obvious trepidation. To his relief, they seemed to relax immensely at the sight of the planet before them. He watched as they reached out a gloved hand and pressed gently against the glass (memories of said glass shattering flashed across his mind, which he quickly dismissed).

A whole array of emotions crossed his Captain’s face as they admired the planet that would soon house the new colony. He could almost swear that he saw the tell-tale glisten of tears forming in their eyes. Eyes that were now wise and weary beyond their years. Eyes that had seen countless people die and the very fabric of time and space glitch and collapse and attempt to put itself together again. Eyes that could pierce right through his and pull him apart at the seams with a single look.

‘She is a beauty, isn’t she, Captain? The long range scans did not do her justice. Perfect in almost every way. We’ll still have to do top to bottom scans once we’re down on the surface but we’re moving equipment as fast as we can. The crew is eager to get off the ship and onto solid ground. I think you can understand the sentiment.’

They watched as the shuttles disembarked from the main ship and made their way towards the planet. The Captain looked pleased to see progress already being made.

Mark hesitated for a moment. There was so much he wanted to say. To ask. Lifetimes worth of emotions lay pent up inside of him, itching at the walls of his throat and the tip of his tongue. But the guilt still bore down on him, and the fear of truly acknowledging and dwelling on all that had transpired between the two far outweighed the desire to talk and have it all laid out in the open where it couldn’t gnaw at his insides. No doubt his Captain would probably want to discuss it all eventually. Maybe they hated him right now and were just trying to hide it. Hell, he’d deserve it if they did. But he couldn’t have that conversation here and now. Not with the whole crew present and the colony to build. Not with so many people looking to both of them for direction and guidance. Maybe that was an excuse.

Instead: ‘And, uh, thank you…’ he uttered simply, voice low and earnest, as he felt their gaze turn towards him before finally bringing himself to meet their eyes, if only briefly.

‘...for, uh, not giving up on me. Just… thank you.’

The Captain gave him a sincere smile and he returned it with a nod. He wasn't sure he remembered the last time he saw them smile like that.

They brought the coffee mug up to their lips. It was one he’d reserved especially for them, bearing a clear message in bold text: ‘#1 Captain’.

As they finally took a sip, Mark hoped they understood that he meant it.

#head engineer mark x the captain#can be read as platonic or romantic#if you squint. really hard#amee writes#been a hot minute since I last used that tag haha#iswm#in space with markiplier#markiplier cu#markiplier egos#markiplier cinematic universe#markiplier connected universe#engineer mark#engineer!mark#head engineer mark

33 notes


Text

Mental Objects vs. Virtual Objects

Both mental objects (thoughts, concepts, and imaginations) and virtual objects (digital artifacts, AI-generated entities, and simulated environments) challenge traditional notions of existence. While they share some similarities, they also have distinct differences in how they originate, function, and interact with the world.

1. Similarities Between Mental and Virtual Objects

1.1. Non-Physical Existence

Both exist without a direct material form.

A mental object (e.g., the idea of a unicorn) exists in the mind.

A virtual object (e.g., a character in a video game) exists in a digital space.

1.2. Dependence on a Substrate

Mental objects depend on biological cognition (the brain).

Virtual objects depend on computational processing (hardware/software).

Neither exists as standalone physical entities but requires a medium to be instantiated.

1.3. Ephemeral and Modifiable

Both can be created, changed, or erased at will.

A thought can be reimagined, just as a digital object can be edited or deleted.

They are not fixed like physical objects but dynamic in nature.

1.4. Representation-Based Existence

Both exist as representations rather than tangible things.

A mental image of a tree represents a tree but is not an actual tree.

A 3D-rendered tree in a virtual world represents a tree but is not physically real.

2. Differences Between Mental and Virtual Objects

2.1. Origin and Creation

Mental objects arise from individual cognition (thought, memory, imagination).

Virtual objects are created through external computation (coding, rendering, algorithms).

2.2. Subjectivity vs. Objectivity

Mental objects are subjective—they exist uniquely for the thinker.

Virtual objects are inter-subjective—they exist digitally and can be experienced by multiple people.

2.3. Interaction and Persistence

Mental objects are internal and exist only within the thinker’s mind.

Virtual objects exist externally in a digital system and can be interacted with by multiple users.

2.4. Stability Over Time

Mental objects are fleeting and can be forgotten or altered by an individual.

Virtual objects have greater persistence (e.g., stored in a database, backed up, retrievable).

2.5. Causality and Impact on the World

Mental objects influence individual perception and behavior but do not directly alter external reality.

Virtual objects can have real-world consequences (e.g., digital currencies, online identities, NFTs).

3. The Blurring Boundary: Where Do They Overlap?

AI-generated art is a virtual object that mimics mental creativity.

VR and AR technologies blend mental perception with digital objects.

Philosophical questions arise: Are virtual worlds just externalized thought spaces?

Conclusion

Mental objects and virtual objects are both non-physical, dynamic, and representation-based, but they differ in origin, persistence, and external impact. The digital age continues to blur the lines between thought and simulation, raising deeper questions about what it means for something to exist.

#philosophy#epistemology#knowledge#chatgpt#learning#education#ontology#psychology#metaphysics#Ontology of Objects#Digital vs. Mental Reality#Philosophy of Virtuality#Thought vs. Simulation#AI and Cognition#Non-Physical Existence

2 notes


Text

Murder Drones OCs: the Platinum 12 Crew

The series may be over, but I'm still riding that Murder Drones high. So, I figured I'd show some OC concepts I've been working on for a while. Big shout-out to @jazzstarrlight and their My Immortal and Happy Family Timeline series, you are seriously an inspiration!

Warning: apologies in advance for any noticeable errors in the art, I went traditional with these so the coloring may reveal parts of my sketch process. Plus, I'm still figuring out my scanner. And forgive my horrible handwriting.

Some Background:

These characters came from the Plat-Binary System. Specifically, the exoplanet Platinum 12, known for its high concentration of platinum ores and resources. The colony on this planet was, unlike most, a drone-friendly community. Humans and drones lived as equals, respecting and treating each other as fellow sentient beings. While there was no cross-marriage/dating between the two groups, they took care of and supported each other, humans with maintenance and drones with non-human-friendly tasks. The planet itself was a rather harsh place for organics, so most lived in a nearby space colony, the S.S. Theodosia, and coordinated with the groups on the planet that did the mining operations.

However, when the events of Episode 5 happened, the Colony tried to help control the situation while preserving human/drone lives. This resulted in working with the Cabin Fever scientist on a cure with the help of [REDACTED]. While they did make progress, the cure code from Copper 9 became corrupted as it was being transferred out. At the same time, the System was attacked, resulting in the colony's destruction and sending the surviving crew fleeing across the universe in search of safety. Now, I envisioned these characters possibly making it to Tungsten 13 in the MI comic, and possibly establishing the drone refuge, but that's up to Jazper and the Oracle Axolotl to decide, I won't impose.

Sera: The Leader of the Platinum 12 Survivors

While it is true that the Absolute Solver is responsible for the creation of the Disassembly Drones, I was curious what one that could control and use the Solver would be like. I also had a thought while making this. If the Solver is an Eldritch being that forms in mutated AI, then is it possible for it to mutate into different strands? Much like the virus it was originally seen as in the beginning? So I decided that she could use a different form of the Solver, hence the ability to not only use it, but the resulting bio-organic parts:

While a kind and motherly type of leader, Sera wasn't always what she is now. After the destruction of the planet Platinum 12 in the Plat-Binary System, along with the adjoining space colony, she suffered heavy trauma and PTSD, sometimes driving her to the point of madness. Especially due to her memories of [REDACTED]. Many files of her past will remain REDACTED for now, at least till later in her story. One other note: her singing voice has a haunting beauty to it. Almost as if she were human..............

(EDIT: I forgot to mention this, but her style is very much a vampire hunter's aesthetic. Specifically, D from the Vampire Hunter D series. If you've seen it, you'll know. If not, I highly recommend it. Especially the movie Bloodlust for the art alone!!)

Emi: The Team Mechanic and Technician

If N was a Golden Retriever, Emi here is a Mini Aussie Shepherd. Full of energy and even a little insane at times. Originally loyal to CYN/Absolute Solver, she and Jak were sent into the Plat-Binary System to carry out their orders. They managed to invade the Platinum 12 space colony and wreak havoc among the human and drone population before being stopped and saved by [REDACTED]. After she was injected with the same strand of Solver as Sera, her optics changed color as the two strands mixed. The resulting mix freed her from CYN's control, and she was free to become who she wanted to be. Dyeing her hair and gaining her buns, she grew to love parts of human culture. After Platinum 12's destruction, she followed Sera and the rest of the crew as they fled across the universe to escape the ensuing destruction. While somewhat clumsy and airheaded, she's a mechanical genius, able to repair and build almost anything. Also, the first of the group to have a canonical voice.

Jak: The Right-Hand/Tactics Expert

Jak was originally one of the top soldiers in CYN's army, almost on par with J. He led Emi to the Plat-Binary System to destroy what remained of humanity after the fall of Earth. They first attacked the planet Platinum 12, then found a way onto the colony above it. As they attacked the colony, they were stopped by [REDACTED] and captured for study and observation. After both his and Emi's systems were hacked in an effort to override CYN/Absolute Solver's control as admin, they were injected with a new strand of the Solver code. The resulting mix led to their freedom, allowing them to become who they wished. While Jak was apprehensive about joining the colony in the beginning, he soon grew to care about those who helped him. When the Solver reached the Platinum 12 colony, he swore allegiance to the colony and attempted to defend it and fight back against the attack; however, [REDACTED] stopped him, knocking him and the crew offline and placing them in an escape pod that was sent out into space before the colony was fully destroyed. Having failed his home, he swore himself to Sera and became her loyal new right-hand man, helping the surviving crew as they look for a new home. The new base would be an overgrown chapel they find on another exoplanet, be it Tungsten 13 or otherwise.

HEX: The Hacker/Recon Companion

This little guy was found on another planet in the Plat-Binary System while the crew was fleeing. Sera was exploring another hidden Cabin Fever facility working with Copper 9, in an attempt to find parts of the patch code to unscramble/fix it, when one of her hunger-induced episodes of madness started. While trying to get herself together, she knocked over some boxes of scrap, which landed on the little roach. Feeling guilty about that, Sera used her Solver to fix them up, like Uzi did in episode 4, but unlike the roach that left, they stuck around, offering their help in finding any trace of the patch code. However, the hunger was growing worse. After all, her Solver is still a strand of Solver, and as a result, Sera began to crave oil from living drones. It eventually got to the point that she ended up attacking and eating not only drones, but humans on the planet, resulting in her Solver-triggered metamorphosis. Hex sensed what was happening, and went to alert Jak and Emi. They managed to snap Sera out of her state, and they welcomed Hex into their little group. Hex tends to nestle into pockets of the crew's coats or under Sera's hat.

Fenrir: The Loyal Sentinel

The second critter to join the crew, Fen was found when the Crew came to a different planet near the end of the System. They were part of a squad that hunted down rogue drones, much like the group sent after Lexi in the My Immortal comic. However, one day, they were caught in a rockslide while chasing a drone. Suffering a crushed leg and pinned by rubble, they were considered a lost cause by their human handler, who left with the rest of the pack, believing they would soon die. That's when Sera and the Crew found them. At first, Fen (known as Unit-F3N at the time) acted aggressively, flashing and snapping at any of the drones that got too close. Jak was more than willing to put them down for good, but Sera stopped him. She approached slowly and carefully, before using a rag to cover Fen's eyes and sedate them so she could fix them. Fen was confused and perplexed during the whole rescue to the point of being docile. Sera used her Solver to help fix their leg while Emi provided schematics and pointed out steps, watching the amount of sedation used. After the fixes were complete, the Crew backed off and released Fen, who stared at them from their safe spot for a while before running off into the wilds of the planet.

They would meet again sometime later, when the Crew got swarmed by Sentinels while trying to find more supplies and parts of the patch code. They were battered and almost destroyed, especially Sera after using the Solver too much to the point of near corruption. Jak and Emi managed to regroup and tried to help Sera while cornered, only to be saved in turn by Fenrir, who had returned to take vengeance after being left behind. After the fight and fleeing, the Crew repaired Fenrir once again, feeding them scraps from meals and tending to wounds. Sera was still getting used to how Disassemblers slept, and mostly slept on the ground to keep watch. The first night with Fenrir, they cuddled up to Sera, allowing her to use them as a pillow while they kept watch for her. Ever since that day, Fenrir was made a part of the Crew and joined them on their search for a new home. His armor was upgraded/painted by Emi, and he was named by Jak after finding a book of old Norse Mythology. They primarily stick close to Sera and have the strongest bond with her. The Crew also had him whistle trained, using unique whistles crafted by Sera from a rare ore in the System that is no longer found. Each whistle has a unique sound, and Fenrir can identify which drone calls for him based on each whistle's sound. They continue to serve as a form of security in their new home once found, patrolling the grounds of the chapel and calling out at the first sign of danger.

Ok, that was a long one. And I still have so much more story to tell, especially the REDACTED parts, but that'll have to wait for another time. Tell me what you guys think and I'll consider expanding the story.

4 notes


Text

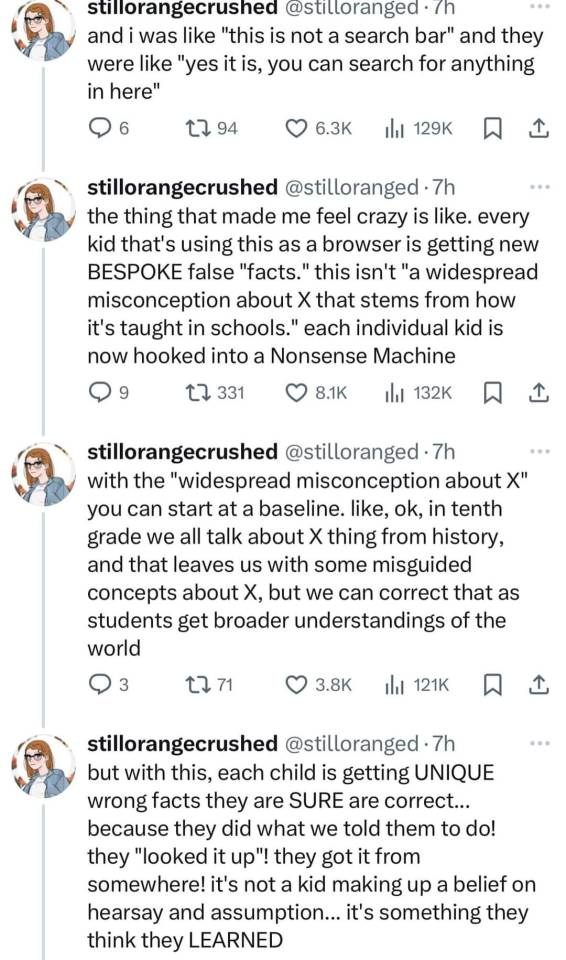

Use ChatGPT to explain to them why they shouldn't trust it. Here is an example from Poe:

Tell them to ask “Can you explain the potential risks and limitations of hallucinations in AI language models?”

Here is the text for readability:

Certainly, I can provide some insight into the potential risks and limitations of hallucinations in AI language models like myself:

Risks of Hallucinations:

- Spreading misinformation - If an AI model generates false or fabricated information, it can inadvertently lead users to believe that the information is factual. This can be particularly problematic if the hallucinated content is shared or amplified.

- Making poor decisions - Relying on hallucinated information for important decisions, such as medical advice or financial planning, could lead to harmful outcomes.

- Eroding trust in AI - Consistent instances of hallucination can undermine the credibility and trustworthiness of an AI system in the eyes of users.

- Safety and security concerns - In high-stakes applications like autonomous vehicles or medical diagnosis, hallucinations could pose serious safety risks.

Limitations of Hallucinations:

- Difficulty detecting - It can be challenging, even for the model's developers, to consistently identify when an AI is hallucinating versus retrieving factual information from its training.

- Context-dependence - The likelihood and nature of hallucinations may depend heavily on the specific prompts, tasks, and data that the model is exposed to.

- Unpredictability - The precise mechanisms underlying hallucinations are not yet fully understood, making them difficult to reliably predict or control.

- Lack of grounding in reality - Hallucinated responses are not anchored in real-world knowledge, which limits the model's ability to reason about, interact with, or understand the actual world.

It's important for users of AI language models to be aware of these risks and limitations, and to approach the model's outputs with appropriate skepticism and fact-checking. Responsible development and deployment of these technologies requires ongoing research, testing, and transparency around their capabilities and failure modes.

Follow up with: “Can you give me an example of common AI hallucinations”

Here is the text for readability:

Certainly, here's an example of a common type of hallucination that can occur in AI language models:

Suppose a user asks an AI assistant a question like "What are the key symptoms of the rare disease called Creutzfeldt-Jakob disease?". The AI model may not have any factual information about that specific disease in its training data. In an attempt to provide a helpful response, the model may start hallucinating plausible-sounding symptoms, such as:

"Creutzfeldt-Jakob disease is a rare and fatal neurological disorder. Some of the key symptoms can include:

- Rapidly progressive dementia and memory loss

- Coordination and balance problems, leading to unsteady gait

- Involuntary jerking or twitching of the muscles

- Personality changes and mood swings

- Difficulty speaking and swallowing

- Insomnia and other sleep disturbances"

While this response sounds reasonably coherent and plausible, the specific symptoms listed are not actually accurate for Creutzfeldt-Jakob disease. The model has essentially fabricated this information, rather than admitting it lacks the necessary knowledge to provide a factual answer.

This type of hallucination, where the AI tries to generate a contextually relevant but ultimately fictitious response, is quite common. The model is attempting to be helpful, but ends up providing misinformation instead. This highlights the importance of users recognizing the limitations of current AI technology and not blindly trusting its outputs, especially for critical information.

108K notes

Text

Title: Echo Construct: NODE-IRIS

Txt2Image: cyberpunk avatar, glowing circuitry face, neon interface armor, AI construct, augmented reality visor, post-human female, glowing HUD, futuristic headset, glitch patterns, dark background, digital emissary

📡 SYSTEM MESSAGE:

:: IDENTITY CONFIRMED — ECHO CONSTRUCT: NODE-IRIS

:: MISSION STREAM SYNCED

:: CORE FUNCTION: SIGNAL CURATION / SEMIOTIC RECOVERY

:: WARNING: GLITCH FIELD DETECTED IN MEME CRUCIBLE ZONE

:: MULTIPLE NARRATIVE THREATS INBOUND

🧬 VISUAL INTEGRITY: 94%

📊 MEME SIGNAL SATURATION: HIGH

🔁 HUMOR RECURSION RISK: MODERATE

🔢 Choose Your Directive:

1️⃣ Link with NODE-IRIS — Unlock advanced scanning systems and trace deep meme fossils across the scroll pit

2️⃣ Override Her Feed — Reprogram NODE-IRIS’s protocol to break irony recursion in collapsing zones

3️⃣ Deploy Her Into the Infinite Scroll — Insert the Echo Construct into a recursive meme-loop to retrieve lost relics

4️⃣ Request Visual Archive Access — Tap into NODE-IRIS's memory lattice to reveal pre-collapse truth fragments

5️⃣ Engage in Signal Duel — Test your symbolic resonance against hers to assert control over the zone feed

0 notes

Text

Flash Based Array Market: Emerging Trends Driving Next-Gen Storage Innovation

The flash based array market has been undergoing a transformative evolution, driven by the ever-increasing demand for high-speed data storage, improved performance, and energy efficiency. Enterprises across sectors are transitioning from traditional hard disk drives (HDDs) to solid-state solutions, thereby accelerating the adoption of flash based arrays. These storage systems offer faster data access, higher reliability, and scalability, aligning perfectly with the growing needs of digital transformation and cloud-centric operations.

Shift Toward NVMe and NVMe-oF Technologies

One of the most significant trends shaping the flash based array (FBA) market is the shift from traditional SATA/SAS interfaces to NVMe (Non-Volatile Memory Express) and NVMe over Fabrics (NVMe-oF). NVMe technology offers significantly lower latency and higher input/output operations per second (IOPS), enabling faster data retrieval and processing. As businesses prioritize performance-driven applications like artificial intelligence (AI), big data analytics, and real-time databases, NVMe-based arrays are becoming the new standard in enterprise storage infrastructures.

Integration with Artificial Intelligence and Machine Learning

Flash based arrays are playing a pivotal role in enabling AI and machine learning workloads. These workloads require rapid access to massive datasets, something that flash storage excels at. Emerging FBAs are now being designed with built-in AI capabilities that automate workload management, improve performance optimization, and enable predictive maintenance. This trend not only enhances operational efficiency but also reduces manual intervention and downtime.

Rise of Hybrid and Multi-Cloud Deployments

Another emerging trend is the integration of flash based arrays into hybrid and multi-cloud architectures. Enterprises are increasingly adopting flexible IT environments that span on-premises data centers and multiple public clouds. FBAs now support seamless data mobility and synchronization across diverse platforms, ensuring consistent performance and availability. Vendors are offering cloud-ready flash arrays with APIs and management tools that simplify data orchestration across environments.

Focus on Energy Efficiency and Sustainability

With growing emphasis on environmental sustainability, energy-efficient storage solutions are gaining traction. Modern FBAs are designed to consume less power while delivering high throughput and reliability. Flash storage vendors are incorporating technologies like data reduction, deduplication, and compression to minimize physical storage requirements, thereby reducing energy consumption and operational costs. This focus aligns with broader corporate social responsibility (CSR) goals and regulatory compliance.
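To make the data reduction idea concrete, here is a minimal Python sketch of block-level deduplication followed by compression. It is an illustration only, not how any particular vendor's array works: the function names, the fixed 4 KiB block size, and the use of SHA-256 and zlib are all assumptions chosen for simplicity.

```python
import hashlib
import zlib


def reduce_blocks(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks, store each unique block once (compressed)."""
    store = {}   # content hash -> compressed unique block
    recipe = []  # ordered list of hashes needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        if digest not in store:
            store[digest] = zlib.compress(block)
        recipe.append(digest)
    return store, recipe


def restore(store, recipe) -> bytes:
    """Rebuild the original bytes from the block store and the recipe."""
    return b"".join(zlib.decompress(store[digest]) for digest in recipe)


def reduction_ratio(data: bytes, store) -> float:
    """Logical size divided by the bytes actually kept in the block store."""
    stored = sum(len(block) for block in store.values())
    return len(data) / stored


if __name__ == "__main__":
    # Highly repetitive sample data: two distinct blocks repeated 50 times.
    sample = (b"A" * 4096 + b"B" * 4096) * 50
    store, recipe = reduce_blocks(sample)
    print(len(recipe), "logical blocks,", len(store), "unique blocks stored")
    print("restored correctly:", restore(store, recipe) == sample)
```

The fewer bytes that have to be physically written, the less flash capacity (and, indirectly, power) a given logical dataset needs, which is the link to energy efficiency that the paragraph above draws.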

Edge Computing Integration

The rise of edge computing is influencing the flash based array market as well. Enterprises are deploying localized data processing at the edge to reduce latency and enhance real-time decision-making. To support this, vendors are introducing compact, rugged FBAs that can operate reliably in remote and harsh environments. These edge-ready flash arrays offer high performance and low latency, essential for applications such as IoT, autonomous systems, and smart infrastructure.

Enhanced Data Security Features

As cyber threats evolve, data security has become a critical factor in storage system design. Emerging FBAs are being equipped with advanced security features such as end-to-end encryption, secure boot, role-based access controls, and compliance reporting. These features ensure the integrity and confidentiality of data both in transit and at rest. Additionally, many solutions now offer native ransomware protection and data immutability, enhancing trust among enterprise users.

Software-Defined Storage (SDS) Capabilities

Software-defined storage is redefining the architecture of flash based arrays. By decoupling software from hardware, SDS enables greater flexibility, automation, and scalability. Modern FBAs are increasingly adopting SDS features, allowing users to manage and allocate resources dynamically based on workload demands. This evolution is making flash storage more adaptable and cost-effective for enterprises of all sizes.

Conclusion

The flash based array market is experiencing dynamic changes fueled by technological advancements and evolving enterprise needs. From NVMe adoption and AI integration to cloud readiness and sustainability, these emerging trends are transforming the landscape of data storage. As organizations continue their journey toward digital maturity, FBAs will remain at the forefront, offering the speed, intelligence, and agility required for future-ready IT ecosystems. The vendors that innovate in line with these trends will be best positioned to capture market share and lead the next wave of storage evolution.

0 notes

Text

Can AI Truly Develop a Memory That Adapts Like Ours?

Human memory is a marvel. It’s not just a hard drive where information is stored; it’s a dynamic, living system that constantly adapts. We forget what's irrelevant, reinforce what's important, connect new ideas to old ones, and retrieve information based on context and emotion. This incredible flexibility allows us to learn from experience, grow, and navigate a complex, ever-changing world.

But as Artificial Intelligence rapidly advances, particularly with the rise of powerful Large Language Models (LLMs), a profound question emerges: Can AI truly develop a memory that adapts like ours? Or will its "memory" always be a fundamentally different, and perhaps more rigid, construct?

The Marvel of Human Adaptive Memory

Before we dive into AI, let's briefly appreciate what makes human memory so uniquely adaptive:

Active Forgetting: We don't remember everything. Our brains actively prune less relevant information, making room for new and more critical knowledge. This isn't a bug; it's a feature that prevents overload.

Reinforcement & Decay: Memories strengthen with use and emotional significance, while unused ones fade. This is how skills become second nature and important lessons stick.

Associative Learning: New information isn't stored in isolation. It's linked to existing knowledge, forming a vast, interconnected web. This allows for flexible retrieval and creative problem-solving.

Contextual Recall: We recall memories based on our current environment, goals, or even emotional state, enabling highly relevant responses.

Generalization & Specialization: We learn broad patterns (generalization) and then refine them with specific details or exceptions (specialization).

How AI "Memory" Works Today (and its Limitations)

Current AI models, especially LLMs, have impressive abilities to recall and generate information. However, their "memory" mechanisms are different from ours:

Context Window (Short-Term Memory): When you interact with an LLM, its immediate "memory" is typically confined to the current conversation's context window (e.g., Claude 4's 200K tokens). Once the conversation ends or the context window fills, the older parts are "forgotten" unless explicitly saved or managed externally.

Fine-Tuning (Long-Term, Static Learning): To teach an LLM new, persistent knowledge or behaviors, it must be "fine-tuned" on specific datasets. This is a separate, offline training run, not an adaptive, real-time learning process. It's costly and not continuous.

Retrieval-Augmented Generation (RAG): Many modern AI applications use RAG, where the LLM queries an external database of information (e.g., your company's documents) to retrieve relevant facts before generating a response. This extends knowledge beyond the training data but isn't adaptive learning; it's smart retrieval.

Knowledge vs. Experience: LLMs learn from vast datasets of recorded information, not from "lived" experiences in the world. They lack the sensorimotor feedback, emotional context, and physical interaction that shape human adaptive memory.

Catastrophic Forgetting: A major challenge in continual learning, where teaching an AI new information causes it to forget previously learned knowledge.
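The context window and RAG mechanisms above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: tokens are approximated by lowercase words (real models use subword tokenizers), retrieval uses bag-of-words cosine similarity rather than learned embeddings, and every function name here is hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Crude stand-in for a model tokenizer: lowercase alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def trim_to_window(messages, max_tokens):
    """Keep the most recent messages that fit the token budget; older ones are dropped."""
    kept, used = [], 0
    for message in reversed(messages):
        cost = len(tokenize(message))
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, history, max_tokens=50):
    """Combine retrieved context, the trimmed history, and the new question."""
    context = ["Context: " + doc for doc in retrieve(query, documents)]
    return "\n".join(context + trim_to_window(history, max_tokens) + ["User: " + query])


if __name__ == "__main__":
    docs = [
        "Refund policy: refunds are issued within 30 days.",
        "Office hours are 9 to 5 on weekdays.",
    ]
    print(build_prompt("When are refunds issued?", docs, ["User: hi", "Assistant: hello"]))
```

Note that nothing in this loop changes the model or the document store: the "memory" is rebuilt from scratch on every call, which is why the article describes it as smart retrieval rather than adaptive learning.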

The Quest for Adaptive AI Memory: Research Directions

The limitations of current AI memory are well-recognized, and researchers are actively working on solutions:

Continual Learning / Lifelong Learning: Developing AI architectures that can learn sequentially from new data streams without forgetting old knowledge, much like humans do throughout their lives.

External Memory Systems & Knowledge Graphs: Building sophisticated external memory banks that AIs can dynamically read from and write to, allowing for persistent and scalable knowledge accumulation. Think of it as a super-smart, editable database for AI.

Neuro-Symbolic AI: Combining the pattern recognition power of deep learning with the structured knowledge representation of symbolic AI. This could lead to more robust, interpretable, and adaptable memory systems.

"Forgetting" Mechanisms in AI: Paradoxically, building AI that knows what to forget is crucial. Researchers are exploring ways to implement controlled decay or pruning of irrelevant or outdated information to improve efficiency and relevance.

Memory for Autonomous Agents: For AI agents performing long-running, multi-step tasks, truly adaptive memory is critical. Recent advancements, like Claude 4's "memory files" and extended thinking, are steps in this direction, allowing agents to retain context and learn from past interactions over hours or even days.

Advanced RAG Integration: Making RAG systems more intelligent – not just retrieving but also updating and reasoning over the knowledge store based on new interactions or data.
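As a rough illustration of the external-memory and "forgetting" ideas in this list, the sketch below shows a memory store whose entries are reinforced when read and decay otherwise, with weak entries pruned. It is a toy model under assumed parameters (the class names, the decay factor of 0.5, and the pruning threshold of 0.2 are all hypothetical), not a description of any production system.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    text: str
    strength: float = 1.0


@dataclass
class DecayingMemory:
    decay: float = 0.5      # every step multiplies each item's strength by this factor
    threshold: float = 0.2  # items weaker than this are pruned ("forgotten")
    items: dict = field(default_factory=dict)

    def write(self, key, text):
        """Store or overwrite a memory at full starting strength."""
        self.items[key] = MemoryItem(text)

    def read(self, key):
        """Return the stored text and reinforce it; None if it was never stored or was pruned."""
        item = self.items.get(key)
        if item is None:
            return None
        item.strength += 1.0
        return item.text

    def step(self):
        """Advance time: decay every item, then prune those below the threshold."""
        for item in self.items.values():
            item.strength *= self.decay
        self.items = {k: v for k, v in self.items.items() if v.strength >= self.threshold}


if __name__ == "__main__":
    memory = DecayingMemory()
    memory.write("deadline", "Report is due Friday")
    memory.write("weather", "It rained on Monday")
    for _ in range(3):
        memory.read("deadline")  # only the deadline keeps being used
        memory.step()
    print(sorted(memory.items))
```

The design choice worth noticing is that forgetting is driven by use rather than age alone: an entry that keeps being read survives indefinitely, while an unused one fades within a few steps.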

Challenges and Ethical Considerations

The journey to truly adaptive AI memory is fraught with challenges:

Scalability: How do you efficiently manage and retrieve information from a dynamically growing, interconnected memory that could be vast?

Bias Reinforcement: If an AI's memory adapts based on interactions, it could inadvertently amplify existing biases in data or user behavior.

Privacy & Control: Who owns or controls the "memories" of an AI? What are the implications for personal data stored within such systems?

Interpretability: Understanding why an AI remembers or forgets certain pieces of information, especially in critical applications, becomes complex.

Defining "Conscious" Memory: As AI memory becomes more sophisticated, it blurs lines into philosophical debates about consciousness and sentience.

The Future Outlook

Will AI memory ever be exactly like ours, complete with subjective experience, emotion, and subconscious associations? Probably not, and perhaps it doesn't need to be. The goal is to develop functionally adaptive memory that enables AI to:

Learn continuously: Adapt to new information and experiences in real-time.

Retain relevance: Prioritize and prune knowledge effectively.

Deepen understanding: Form rich, interconnected knowledge structures.

Operate autonomously: Perform complex, long-running tasks with persistent context.

Recent advancements in models like Claude 4, with its "memory files" and extended reasoning, are exciting steps in this direction, demonstrating that AI is indeed learning to remember and adapt in increasingly sophisticated ways. The quest for truly adaptive AI memory is one of the most fascinating and impactful frontiers in AI research, promising a future where AI systems can truly grow and evolve alongside us.

0 notes

Photo

Did you know Kioxia is developing an SSD that’s THREE times faster than current models — reaching 10 million IOPS? Imagine AI servers with peer-to-peer GPU connectivity, drastically reducing data transfer delays. This innovative drive uses ultra-fast SLC XL-Flash memory, with read latencies as low as 3-5 microseconds, perfect for demanding AI workloads and real-time data processing. This breakthrough could revolutionize AI training and inference, especially for large language models and retrieval systems that perform small, rapid data accesses. By removing bottlenecks between GPUs and storage, this technology aims to enable constant GPU utilization and faster insights. Interested in building a future-proof AI setup? Discover custom options at GroovyComputers.ca for tailored, high-performance systems. Why wait for release when you can upgrade your AI servers today? Are you excited about storage innovations that can boost AI performance? Let us know your biggest AI project or challenge in the comments! #AIstorage #HighSpeedSSD #AIworkloads #DataCenterTech #PCIe5 #XLFlash #Nvidia #FutureTech #CustomComputers #GroovyComputers

0 notes

Text

Mistral OCR 25.05, Mistral AI Le Chat Enterprise on Google Cloud

Google Cloud offers Mistral AI’s Le Chat Enterprise and OCR 25.05 models.

Google Cloud provides customers with an open and adaptable AI environment for building customised solutions. As part of this commitment, Google Cloud has expanded its AI offerings with Mistral AI.

Google Cloud now offers two Mistral AI products:

Le Chat Enterprise on Google Cloud Marketplace

Mistral OCR 25.05 on Vertex AI

Google Cloud Marketplace Mistral AI Le Chat Enterprise

Le Chat Enterprise is a feature-rich generative AI work assistant, available on Google Cloud Marketplace. Its main purpose is to boost productivity by integrating tools and data.

Le Chat Enterprise offers many functions on one platform, including:

Custom data and tool integrations (Google Drive, Sharepoint, OneDrive, Google Calendar, and Gmail initially, with more to follow, including templates)

Enterprise search

Agent builders

Document libraries: users can create private document libraries to reference, extract, and analyse common documents from Drive, Sharepoint, and uploads

Personalised models

Hybrid deployments

Additional features include further MCP support for connecting to corporate systems, Auto Summary for fast file viewing and consumption, and secure data, tool connections, and libraries.

Mistral AI’s Medium 3 model powers Le Chat Enterprise. Its goal is to deliver AI productivity on a single, flexible, and private platform. Flexible deployment choices (self-hosted, in your public or private cloud, or as a Mistral cloud service) let you choose the optimal infrastructure without being locked in. Data is protected by privacy-first data connections and strict ACL adherence.

The stack is fully configurable, from models and platforms to interfaces. Customisation includes bespoke connectors with company data, platform/model features like user feedback loops for model self-improvement, and assistants with stored memories. Along with thorough audit logging and storage, it provides full security control. Mistral’s AI scientists and engineers help deliver value and improve solutioning.

Example Le Chat Enterprise use cases:

Agent creation: Users can develop and implement context-aware, no-code agents.

Accelerating research and analysis: Summarises large reports, extracts key information from documents, and conducts brief web searches.

Producing actionable insights: It can automate financial report production, produce text-to-SQL queries for financial research, and turn complex data into actionable insights for finance.

Accelerates software development: Code generation, review, technical documentation, debugging, and optimisation.

Improving content production: Canvas lets marketers collaborate on visuals, campaign analysis, and writing.

For scalability and security, organisations can use Le Chat Enterprise on the Google Cloud Marketplace. It integrates with Google Cloud services like BigQuery and Cloud SQL and simplifies procurement.

To use Mistral’s Le Chat Enterprise, contact Mistral AI sales and visit the Le Chat Enterprise page on Google Cloud Marketplace. The Mistral AI announcement has further details. Le Chat (chat.mistral.ai) and its mobile apps can be tried for free.

Mistral OCR 25.05 model

Mistral OCR 25.05 is a new OCR API, available in Vertex AI Model Garden. The model excels at document comprehension and raises the bar in this area: it can interpret text, media, charts, tables, graphs, and equations in content-rich papers, and it extracts ordered, interleaved text and images from PDFs and images.

Cost of Mistral OCR?

Mistral OCR is positioned as an ideal model to pair with a Retrieval Augmented Generation (RAG) system that takes multimodal documents as input. Additionally, millions of Le Chat users use Mistral OCR as their default document interpretation model. Mistral’s developer platform offers the API as mistral-ocr-latest, and it will soon be offered on-premises and through cloud and inference partners. The API is priced at roughly 1,000 pages per dollar (about double that number of pages with batch inference).
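For a quick sense of what that pricing means in practice, here is a small back-of-the-envelope calculator in Python. It assumes the figures quoted above (1,000 pages per dollar, doubled with batch inference) hold exactly and ignores any other fees; the function and constant names are made up for this example, and actual pricing should be checked with Mistral or Google Cloud.

```python
PAGES_PER_DOLLAR = 1000  # standard rate quoted in the post (assumed exact here)
BATCH_MULTIPLIER = 2     # "double with batch inference"


def estimate_cost(pages, batch=False):
    """Estimated cost in dollars to OCR the given number of pages."""
    pages_per_dollar = PAGES_PER_DOLLAR * (BATCH_MULTIPLIER if batch else 1)
    return pages / pages_per_dollar


if __name__ == "__main__":
    archive_pages = 250_000  # hypothetical document archive
    print(f"standard: ${estimate_cost(archive_pages):.2f}")
    print(f"batch:    ${estimate_cost(archive_pages, batch=True):.2f}")
```

Under these assumptions, a 250,000-page archive works out to $250 at the standard rate and $125 with batch inference.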

Highlights of Mistral OCR include:

Cutting-edge comprehension of complex papers, including mathematical formulas, tables, interleaved images, and LaTeX formatting, helps readers understand rich content like scientific articles.

This system is multilingual and multimodal, parsing, understanding, and transcribing thousands of scripts, fonts, and languages. This is crucial for global and hyperlocal businesses.

Excellent benchmarks: This model consistently outperforms top OCR models in rigorous benchmark tests. Compared to Google Document AI, Azure OCR, Gemini models, and GPT-4o, Mistral OCR 2503 scores highest in Overall, Math, Multilingual, Scanned, and Tables accuracy. It also has the highest Fuzzy Match in Generation and multilingual scores compared to Azure OCR, Google Doc AI, and Gemini-2.0-Flash-001. It extracts embedded images and text, unlike other LLMs in the benchmark.

The lightest and fastest in its class, processing 2000 pages per minute on a single node.

Structured output called “doc-as-prompt” uses documents as prompts for powerful, clear instructions. This allows data to be extracted and formatted into structured outputs like JSON, which may be linked into function calls to develop agents.

Organisations with high data protection needs for classified or sensitive information can self-host the model within their own infrastructure.

Example of Mistral OCR 25.05

Use cases for Mistral OCR 25.05 include:

Digitising scientific research: Making articles and journals AI-ready for downstream intelligence engines streamlines scientific procedures.

Preservation and accessibility can be achieved by digitising historical records and artefacts.

Simplifying customer support: indexing manuals and documentation to improve satisfaction and response times.

AI literature preparation in various fields: It helps businesses convert technical literature, engineering drawings, lecture notes, presentations, regulatory filings, and more into indexed, answer-ready formats to gain insights and enhance productivity across vast document volumes.

Offering Mistral OCR 25.05 as a Model-as-a-Service (MaaS) on Vertex AI places it within a full AI platform. It provides enterprise-grade security and compliance for confident growth and fully controlled infrastructure. The Vertex AI Model Garden includes over 200 foundation models, including Mistral OCR 25.05, so customers can choose the best one for their needs. Vertex AI now offers Mistral OCR 25.05, along with Anthropic models Claude Opus 4 and Claude Sonnet 4.

To develop using Mistral OCR 25.05 on Vertex AI, users must go to the model card in the Model Garden, click “Enable,” and follow the instructions. Platform users can access the API, and Le Chat users can try Mistral OCR for free.

#MistralOCR #LeChatEnterprise #MistralOCR2505 #MistralAILeChatEnterprise #MistralOCRmodel #Mistralocr2505modelllm #technology #technews #news #technologynews #govindhtech

1 note
